Murray Woodman (Managing Director, Morpht) and Jennifer Cox (User Experience, TGA) talk about the personalisation developed for the TGA website.

The presentation was well received and prompted a number of questions, most of which were answered at the Meetup. If you missed it, here are the questions and answers.

Why personalisation?

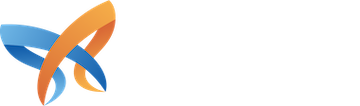

Personalisation improves user engagement and satisfaction. Users expect sites to be personalised for them and are more likely to purchase, repurchase and recommend to their friends. In the case of government sites, which tend to be more information-based, user satisfaction is improved by providing easy pathways to important content and transactional forms.

© 2021 McKinsey & Company research.

A task driven approach

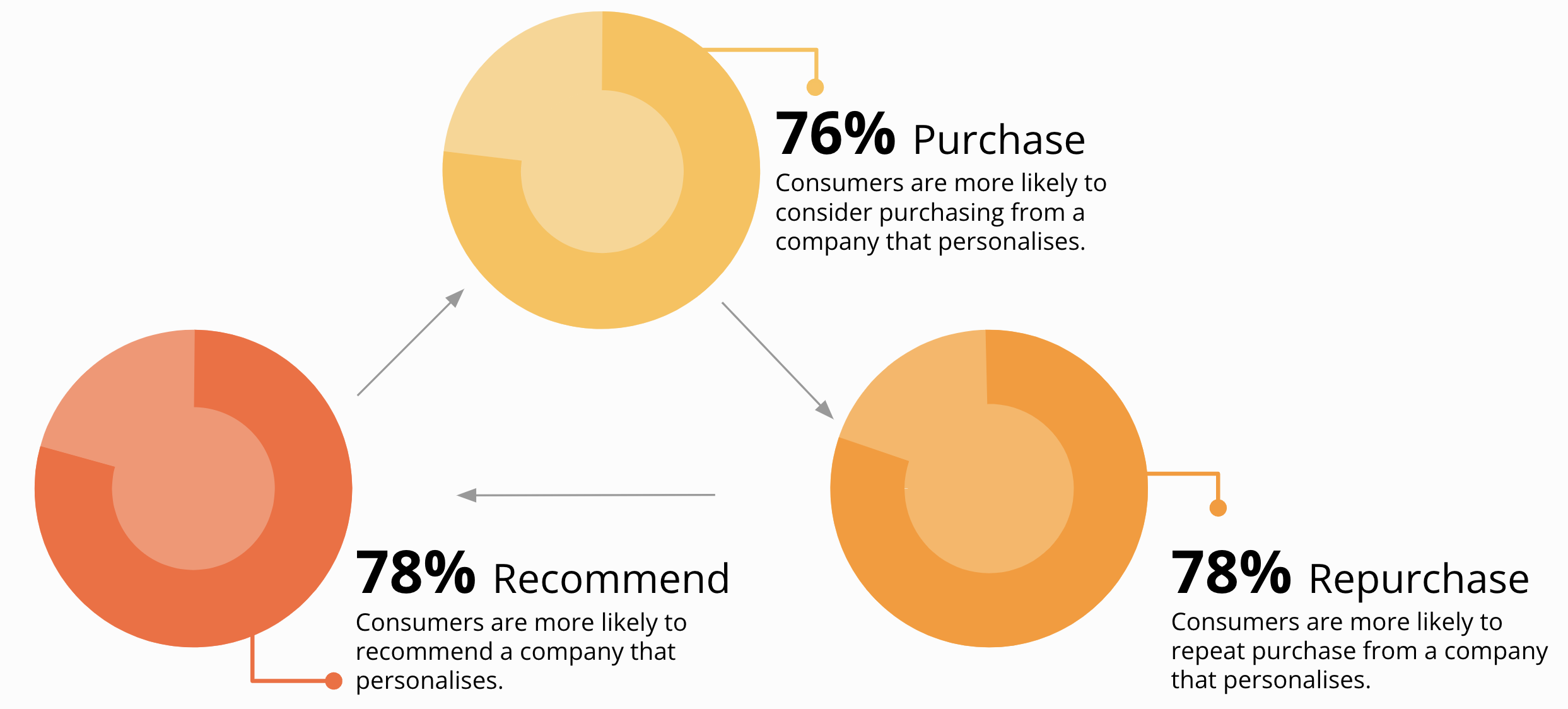

A user profile was developed across a number of dimensions, including favourite audience, topic, product type and service. These dimensions were used to personalise content, primarily through task cards for each item in each dimension. For example, each audience on the site was associated with a set of key tasks, and these were delivered to the user as cards.

In the example shown, we see a set of cards for the "medicine" product type. These cards are shown on the homepage to sponsors who have an affinity for the medicine product type.
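Below is a minimal sketch of how cards like these might be selected. It assumes that each dimension value, such as the "medicine" product type, maps to an editor-curated list of cards stored under a `dimension:value` key, plus a `general` list for visitors with no profile yet. `TaskCard`, `selectTaskCards` and the key format are hypothetical. The limit of six is taken from the homepage description at the end of this post. This is not the TGA data model.

```typescript
// Hypothetical shape of an editor-curated task card.
interface TaskCard {
  title: string;
  url: string;
}

type CardDimension = "audience" | "productType" | "service";

// The winning value per dimension, e.g. { productType: "medicine" }.
type CardProfile = Partial<Record<CardDimension, string>>;

// Cards are looked up by hypothetical "dimension:value" keys, plus a "general" fallback key.
function selectTaskCards(
  profile: CardProfile,
  cardsByKey: Map<string, TaskCard[]>,
  limit = 6,
): TaskCard[] {
  const order: CardDimension[] = ["audience", "productType", "service"];
  const seen = new Set<string>();
  const selected: TaskCard[] = [];
  for (const dimension of order) {
    const value = profile[dimension];
    if (value === undefined) continue;
    for (const card of cardsByKey.get(`${dimension}:${value}`) ?? []) {
      if (selected.length >= limit) return selected;
      if (seen.has(card.url)) continue; // same task promoted by two dimensions
      seen.add(card.url);
      selected.push(card);
    }
  }
  // No context yet (e.g. a first-time visitor): fall back to general cards.
  return selected.length > 0 ? selected : (cardsByKey.get("general") ?? []).slice(0, limit);
}
```

The fixed dimension order is an illustrative choice. Audience cards come first, and a task promoted by two dimensions appears only once.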

Next best step - a call to action

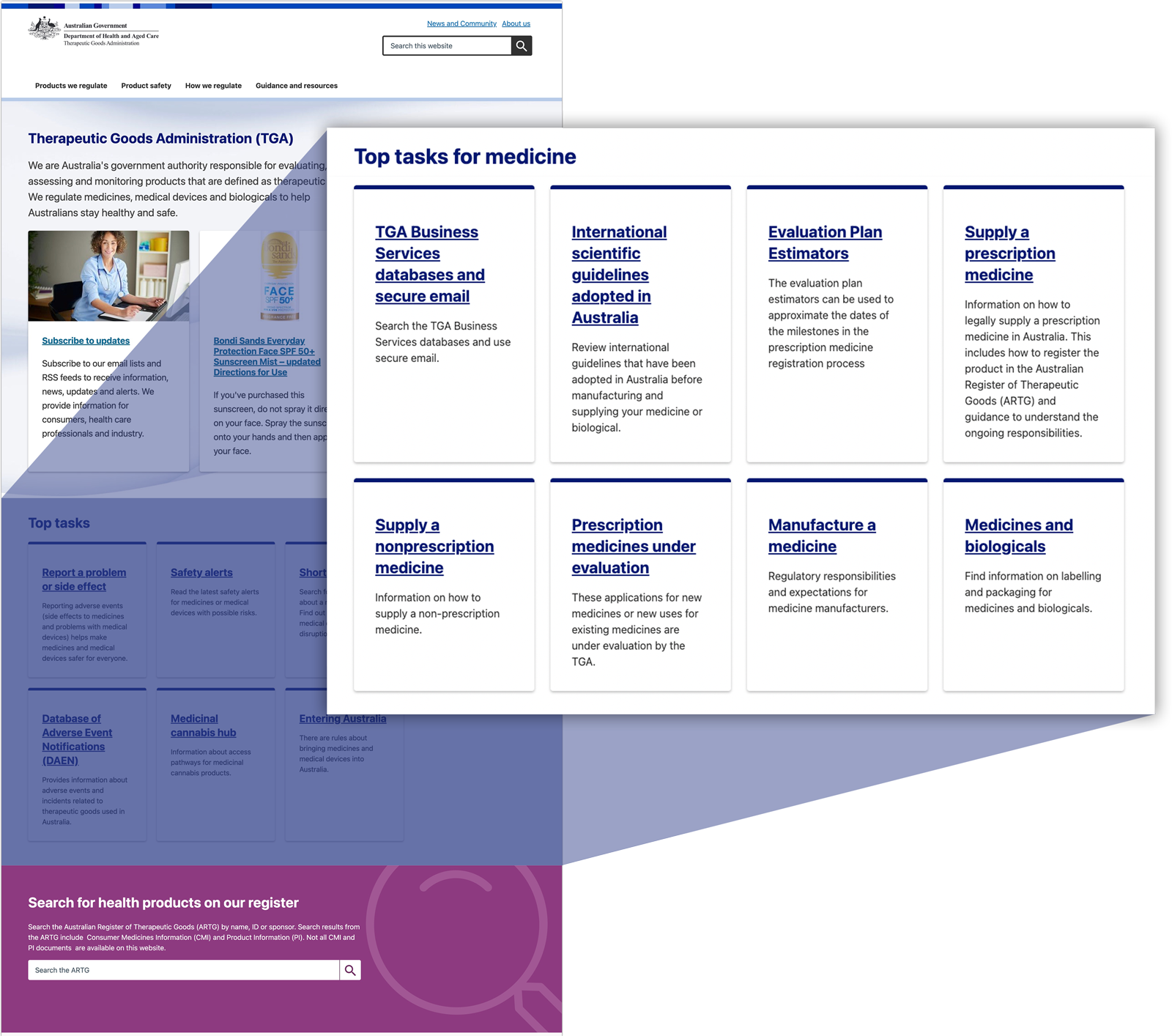

The user profile also tracks a number of variables which are used to determine the next best step for a user. This is done via a cascading rule set for the current intent, outcome, campaign or stage a user is on. From time to time, users pick up these time-limited (1 hour) attributes, which allows personalised CTAs to be displayed to them.

In the example shown, the user has picked up the "new ingredient" intent, most likely by completing a decision tree or visiting a specific page. Once the intent has been derived, a CTA can be shown that pushes the user through to the transactional page. After the user clicks the CTA, the intent is satisfied and the CTA is no longer shown on subsequent page loads.
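The cascade can be sketched as follows. The sketch makes three assumptions:

- Each time-limited attribute is stored with an expiry timestamp.
- Precedence runs intent, outcome, campaign, then stage. The post names these attributes but not their priority.
- CTAs are looked up by a `kind:value` key.

`TimedProfile`, `pickUp`, `nextBestStep`, `satisfy` and the `new-ingredient` slug are hypothetical. The real profile lives in local storage rather than in plain objects.

```typescript
type AttributeKind = "intent" | "outcome" | "campaign" | "stage";

interface TimedAttribute {
  value: string;
  expiresAt: number; // epoch milliseconds
}

type TimedProfile = Partial<Record<AttributeKind, TimedAttribute>>;

interface Cta {
  label: string;
  url: string;
}

const ONE_HOUR_MS = 60 * 60 * 1000;

// Assumed precedence: the first live attribute with a matching CTA wins.
const CASCADE: AttributeKind[] = ["intent", "outcome", "campaign", "stage"];

// Record a time-limited attribute, e.g. after a decision tree or a key page.
function pickUp(profile: TimedProfile, kind: AttributeKind, value: string, now: number, ttlMs = ONE_HOUR_MS): TimedProfile {
  const updated: TimedProfile = { ...profile };
  updated[kind] = { value, expiresAt: now + ttlMs };
  return updated;
}

// Walk the cascade and return the first unexpired attribute that has a CTA.
function nextBestStep(profile: TimedProfile, ctas: Map<string, Cta>, now: number): { kind: AttributeKind; cta: Cta } | undefined {
  for (const kind of CASCADE) {
    const attr = profile[kind];
    if (attr === undefined || attr.expiresAt <= now) continue; // missing or expired
    const cta = ctas.get(`${kind}:${attr.value}`);
    if (cta !== undefined) return { kind, cta };
  }
  return undefined;
}

// Clicking the CTA satisfies the attribute, so it is not shown on later page loads.
function satisfy(profile: TimedProfile, kind: AttributeKind): TimedProfile {
  const updated: TimedProfile = { ...profile };
  delete updated[kind];
  return updated;
}
```

In this sketch an expired or satisfied intent simply drops out of the cascade. Whatever lower-priority attribute is still live then determines the CTA.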

Conclusion

The TGA site is complex, with a range of audiences who have different goals. A personalised approach to the delivery of content, tasks and CTAs has helped site visitors navigate the site and undertake tasks.

Questions from the audience

We have different audiences coming onto a page because they are interested in the content in general. How do you handle audience-specific content on a page which may be accessed by multiple audiences?

This is a very interesting and not uncommon problem. It can happen when content is organised along the lines of a “topic” that can be of interest to multiple audiences. This can be a difficult problem to solve and can lead to several possible solutions:

- Ignore it - the topic is primary. If the topic page stands on its own and sits outside the site hierarchy, this is a possible solution.

- Ignore it - slot the topic into the best-fitting place in the hierarchy.

- Duplicate the content and provide different versions for the audiences. This tends to happen when the site hierarchy is dominated by the audiences.

- Do not duplicate the content, but do duplicate the menu item. This is a poor solution because one resource, the topic, appears in two places but on a single URL, which is confusing.

All of these options have been observed in the wild on government sites. In each case, a pragmatic solution has been taken depending on the nuances of the site.

An alternative solution, which may suit sites with a predominantly topic-based approach, is as follows:

- Create a topic as desired and place it into the hierarchy as needed.

- Provide a “General” view of the topic by default. This could include generic introduction text and unfiltered listings.

- Provide the user the ability to customise the audience view for that page by selecting an option from a list. This preference could then be saved.

- Filter the content for the audience if the user has so opted.
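The "Topics by audience" steps above can be sketched as follows, assuming that:

- listing items carry the audience tags applied by editors;
- the chosen view is saved under a single storage key;
- the "General" view is the default.

The `topicAudienceView` key, the function names and `MemoryStore` (an in-memory stand-in for `localStorage`, so the sketch runs outside a browser) are hypothetical.

```typescript
// Anything with getItem/setItem works; in a browser, window.localStorage fits.
interface PreferenceStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

interface ListingItem {
  title: string;
  audiences: string[]; // audience tags applied by editors
}

const GENERAL_VIEW = "general";
const AUDIENCE_VIEW_KEY = "topicAudienceView"; // hypothetical storage key

function saveAudienceView(store: PreferenceStore, audience: string): void {
  store.setItem(AUDIENCE_VIEW_KEY, audience);
}

// Default to the general view until the user opts into an audience.
function currentAudienceView(store: PreferenceStore): string {
  return store.getItem(AUDIENCE_VIEW_KEY) ?? GENERAL_VIEW;
}

// General view: unfiltered listing. Audience view: only items tagged for it.
function filterForAudience(items: ListingItem[], audience: string): ListingItem[] {
  return audience === GENERAL_VIEW ? items : items.filter((item) => item.audiences.includes(audience));
}

// In-memory stand-in for localStorage so the sketch runs outside a browser.
class MemoryStore implements PreferenceStore {
  private readonly data = new Map<string, string>();
  getItem(key: string): string | null {
    return this.data.get(key) ?? null;
  }
  setItem(key: string, value: string): void {
    this.data.set(key, value);
  }
}
```

A single site-wide preference is the simplest choice. Keying the preference by topic would let users pick a different audience view per page.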

This “Topics by audience” approach to “personalising” the content is a viable and elegant solution for sites that have a topic focus but need an audience tilt to the way data is presented.

Have you tried personalising with Optimizely?

No. The personalisation we created for the TGA site uses tools available within the Drupal website itself. The TGA solution has all of the logic, promotions, tasks and assets stored in the CMS. There is no need to integrate with or manage multiple systems to deliver personalisation.

How do you handle users on shared devices? Can a library computer have a meaningful profile?

User behaviour is tracked in the user's browser. Over time a profile is built up for the “top” audiences, topics, product types and services. If the computer was being used by several people with different interests, the aggregated and summarised data would not provide a clear representation of the interests of the individual using the computer, at that point in time.

That said, shared computers in public spaces such as libraries would be expected to start a new user session for each user so that data is not leaked between users. So in many cases, this should be a non-issue.

There are many parts of the TGA solution which are not based on the summarisation of aggregate data. For example, a user visiting an individual topic or service, or coming in on a specific campaign, can trigger a short-lived, time-based “intent” to perform a certain task. Here the intent overrules the underlying, more popular values, so the system adapts to the tasks the current user is undertaking.

If past browsing history is a factor, what happens when a user comes to the site for a different or new task?

Yes, past browsing history is a factor. When viewing a page, the metadata on that page (topic, audience, product type, service, intent) is used to help build the user profile. All of these aspects are taken into account when personalising the content.

When a user is undertaking a new task we make use of the “intent” concept where a user is shown a time-based promotion to complete a certain task, e.g. "Find out about a service", "complete a form", or "research more" on a subject area. These time-based intents last for an hour (or as configured for the site). The site therefore adapts for a short time to the new intent so that the “next best step” call to action at the bottom of each page will show what is considered to be the most relevant item based on recent activity.

If the personalisation engine gets it wrong have you built in a mechanism for the user to easily correct it and find what they need?

It’s an interesting question as to what “getting it wrong” means. As user experience designers and site builders, we all try to build systems that best help users achieve their goals. This applies to static sites with good information architecture and wayfinding, as well as to personalised sites with custom content areas. In both cases, the aim is to support users in their activities: point them in the right direction, but also provide options for more general items if needed.

One of the principles we adopt is that it is important for users not to get trapped in an echo chamber and only see content that is deemed relevant to them. In the case of topics and services, it is possible to navigate to them by ordinary means. The promotional items shown to users point them in the right direction, but the more general options are also available.

A good example of this would be the homepage news. We could have decided to filter that by topic or some other dimension. This was not done because it was deemed important for all site visitors to see what was new and fresh on the site, no matter what their interests were.

How do you balance personalisation vs. generalisation in user journey design, in terms of unidentifiable cohorts and miscellaneous content categories?

Determining the interests of users is probably more of an art than a science. As individuals, we are all multifaceted, with many interests. We also change from day to day. So it is not possible to say that you have come up with a categorisation of a person that is in some sense true. We are doing our best to derive some general ‘tilts’ that can be used to improve the experience.

The system will allocate users to a single item for each of the dimensions of audience, topic, product type and service. The system is not attempting to ‘cluster’ users into cohorts. We are allocating dimensions to the user based on what they’ve interacted with. This has strengths and weaknesses.

The weakness, as you allude to, is that there may be groupings that are not recognised. In that case, the site owners may need to do further research to identify audience cohorts and tag content with the relevant dimensions to align with those cohorts.

The strength is that we have a named category for which we have concrete journeys to promote.

Putting that aside, we also make use of the “intent” dimension. Any piece of content on the site can be associated with an intent, and that intent is then associated with one or more promotions. In this case, the actual categorisation of the user has no relevance and there is no need to assign the user to a cohort. This becomes purely about an interest in a particular task and getting served a promotion to guide you to further information about that task.

So we take a two-pronged approach: assignment of characteristics (linked to tasks) and identification of intent (linked to CTAs).

With the affinity-based logic, how does this cater to a user if they change their behaviour or require a variation on their task or interest area?

A user’s affinity for a certain subject is determined through a simple method: counting the viewed items tagged with that interest. If behaviour changes over time, a new interest will eventually win out.
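Counting of this kind can be sketched as below. The sketch assumes that each page exposes its dimension tags as metadata and that the profile keeps a running tally per value. `recordPageView`, `topValue` and the metadata shape are hypothetical names for illustration, not the Convivial Profiler API.

```typescript
type ProfileDimension = "audience" | "topic" | "productType" | "service";

// Dimension tags read from the page being viewed (hypothetical shape).
type PageMetadata = Partial<Record<ProfileDimension, string[]>>;

// Running tally per dimension: value -> number of viewed pages tagged with it.
type AffinityCounts = Partial<Record<ProfileDimension, Record<string, number>>>;

// Add one to every value the current page is tagged with.
function recordPageView(counts: AffinityCounts, page: PageMetadata): AffinityCounts {
  const updated: AffinityCounts = { ...counts };
  for (const dimension of Object.keys(page) as ProfileDimension[]) {
    const tally: Record<string, number> = { ...(updated[dimension] ?? {}) };
    for (const value of page[dimension] ?? []) {
      tally[value] = (tally[value] ?? 0) + 1;
    }
    updated[dimension] = tally;
  }
  return updated;
}

// The value with the highest count wins; ties go to the value seen first.
function topValue(counts: AffinityCounts, dimension: ProfileDimension): string | undefined {
  let best: string | undefined;
  let bestCount = 0;
  for (const [value, count] of Object.entries(counts[dimension] ?? {})) {
    if (count > bestCount) {
      best = value;
      bestCount = count;
    }
  }
  return best;
}
```

Because the counts only ever grow, a new interest wins only once its tally overtakes the old one. This is where the time-based intents described earlier in this post help the site react within a single session.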

As described in other answers, we do take an intent-based approach as well so that certain actions will trigger a time-based intent which will override the affinity. This helps the system adapt and helps ensure that users are not stuck in the same old patterns and see the same old promotions and tasks.

What led you to personalization as the solution in the first instance? I’m interested in user research insights that led you there.

The TGA site has several distinct audiences with different journeys. The content is complex and has been organised by topic. In many cases, different audiences will be interested in consuming common topics. On the site, there was a desire to take a more topic-driven approach to information architecture, but also recognise the need for the different journeys the various audiences would undertake. On top of this, there were links to “authenticated” services that industry would be interested in. Moving industry users through to these services efficiently was a high priority.

To summarise, there was a need to deliver contextual tasks based on audience and a need to push users through to complete transactional services efficiently. Personalisation was chosen as a way to augment the IA of the site to help achieve these aims.

How does personalised content impact testing? Are there extra considerations if you were to use screenshot comparison tools or similar?

When there is no user context available, the system will tend to default to a “general” category. This means that first-time users will see neutral or introductory content. Testing systems, which rely on headless browsers, will not have the context and so they will receive the general or default page. This is what would be used in comparisons for tools such as Cypress.

Editors and site owners can test what a page may look like for different users through override controls we made available at the bottom of the page. These controls allow editors to set the various user attributes (audience-consumer, topic-covid, product-type-medicine) and then view the page to see what is displayed. This allows new content or rules to be road tested.
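One way such override controls could work is sketched below. The sketch parses `dimension-value` strings like those quoted above and lays them over the stored profile to build a preview, without modifying what is stored. The dimension list and function names are hypothetical, and the sketch does not describe how the TGA controls are built.

```typescript
// Dimension prefixes as they appear in the override strings, e.g. "topic-covid".
const OVERRIDE_DIMENSIONS = ["audience", "topic", "product-type", "service"];

// Split "dimension-value" into its parts; unknown prefixes are ignored.
function parseOverride(token: string): [string, string] | undefined {
  for (const dimension of OVERRIDE_DIMENSIONS) {
    if (token.startsWith(`${dimension}-`)) {
      const value = token.slice(dimension.length + 1).trim();
      return value.length > 0 ? [dimension, value] : undefined;
    }
  }
  return undefined;
}

// Build a preview profile: overrides win, but the stored profile is not modified.
function applyOverrides(stored: Record<string, string>, tokens: string[]): Record<string, string> {
  const preview: Record<string, string> = { ...stored };
  for (const token of tokens) {
    const parsed = parseOverride(token.trim());
    if (parsed !== undefined) preview[parsed[0]] = parsed[1];
  }
  return preview;
}
```

Matching on known prefixes rather than splitting on the first hyphen keeps multi-word dimensions such as `product-type` intact.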

How do you handle FOI requests if the content is different for each user?

The same question could be asked of any FOI request, as it is not known which pages the user actually visited, whether the site was available or what was in their cache. In other words, there will always be some unknowns about the context of what happened on a certain date.

What is known, though, is the actual content of the site. With the solution in place at the TGA, there will continue to be a strong audit trail. All of the promoted content is stored in Drupal, so it would be possible to know the exact tasks and promotions that were in place at the time; that is, the content at a certain point in time can be known. It is true that it will not be known which pieces of content an individual user saw, but this is true of websites in general that do not track the behaviour of individual users.

How do you address privacy when you are collecting personal information to serve a customised experience? How long is it stored, and how long does it remain relevant?

On the TGA website, we do not collect any identity information. A client ID is randomly generated for internal reference. That ID is not used in analytics tracking or shared externally with other systems. All data stays on the user’s machine.

The data stays in the local storage of the machine. If a user clears cookies for the site, the local storage information will also be removed.

Have you considered AI to predict behavioural change?

The TGA solution uses a fairly simple way of categorising users according to behaviour. There are more sophisticated systems out there. For example, the Recombee AI recommendation engine is available through GovCMS DXP. This system tracks item ID and client ID to build a database of user behaviour, which can be used to identify recommendations based on the “wisdom of the crowd” and other aspects such as freshness and popularity. This might be helpful on informational sites (articles, media) that have a large amount of related content.

You're picking a user's audience based on some browsing. What sort of testing did you do, how sure are you of low incorrect audience allocation?

This is an interesting area. During testing on the TGA site, the following approach was taken:

- Identify the audiences

- Identify a set of search “query” terms that each audience might use

- For each audience, search for each term to get a result set

- For each result set, view the top 10 items

- Inspect the “audience” dimension in local storage

- Confirm that the correct audience has “won”

In other words, we attempted to replicate the expected behaviour of each audience to confirm that users are being allocated to the correct audience. This process validates three things:

- We have a clear idea of what each audience wants

- Search is working well and good results are shown

- The results have been correctly tagged with audience
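The manual test procedure above could be approximated in code. This sketch rests on three assumptions:

- Viewing an item adds one to every audience it is tagged with.
- The winning audience can therefore be read directly from the tags on the top 10 results.
- A search function can be called with each query term.

`SearchResult`, `SearchFn`, `allocatedAudience` and `checkAllocation` are hypothetical names. In practice the check was done by browsing and inspecting local storage.

```typescript
// One search result with the audience tags applied by editors (hypothetical shape).
interface SearchResult {
  url: string;
  audiences: string[];
}

// Hypothetical search function: query -> ranked results.
type SearchFn = (query: string) => SearchResult[];

// Simulate viewing the top N results and return the audience with the highest count.
function allocatedAudience(results: SearchResult[], topN = 10): string | undefined {
  const tally = new Map<string, number>();
  for (const result of results.slice(0, topN)) {
    for (const audience of result.audiences) tally.set(audience, (tally.get(audience) ?? 0) + 1);
  }
  let winner: string | undefined;
  let best = 0;
  for (const [audience, count] of tally) {
    if (count > best) {
      winner = audience;
      best = count;
    }
  }
  return winner;
}

// Run every query for every audience and report the ones where the wrong audience "won".
function checkAllocation(queriesByAudience: Map<string, string[]>, search: SearchFn): string[] {
  const failures: string[] = [];
  for (const [audience, queries] of queriesByAudience) {
    for (const query of queries) {
      const winner = allocatedAudience(search(query));
      if (winner !== audience) failures.push(`${audience} / "${query}": got ${winner ?? "none"}`);
    }
  }
  return failures;
}
```

A failure points to one of the three things the procedure validates: an unclear picture of the audience, weak search results, or incorrect tagging.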

During testing we got good results, with the correct audience winning. However, there were strong correlations between some audiences where editors had tended to select certain audiences by default. This shows the importance of applying audience tags only where they are relevant, so that they genuinely “push up” items. Metadata applied this way provides a much stronger signal, both for characterising users and for filtering search results in facets.

From an archive perspective, how did you consider the content delivered to a user if it's variable?

I’m not sure what this question is driving at. The entire corpus (500K nodes) is available to all users through search and other datasets and listings. So it is fair to say that the bulk of the site operates in a fairly standard way. We are just trying to help users through to certain key pages for transactional service delivery and key information items.

What, if any user testing did TGA do prior to investing in the personalisation solution?

The personalisation project followed an initial discovery period and Phase 1. A lot of discovery and user research was done in these initial phases, focused on the pathways to transactional services, key tasks and audiences. Research was conducted with surveys, Treejack tests and interviews. For the personalisation project, a customer survey was completed by many users, identifying areas for consideration and possible concerns about how personalisation would be used.

Can a user opt out?

Technically this is possible if the system has been set up that way. It is possible to set a cookie value to stop the running of the personalisation rules on page load. On other projects, this has been integrated into a “cookie preferences” pop-up. On the TGA site, there is no such opt-out option but one could be added if so desired.
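A minimal sketch of the opt-out check described above, run before the personalisation rules on page load. The cookie name `personalisation_opt_out` and its value `1` are hypothetical, as is the `runPersonalisationRules` call shown in the comment.

```typescript
const OPT_OUT_COOKIE = "personalisation_opt_out"; // hypothetical cookie name

// Read one cookie from a "name=value; name2=value2" string such as document.cookie.
function readCookie(cookieString: string, cookieName: string): string | undefined {
  for (const part of cookieString.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue;
    if (part.slice(0, eq).trim() === cookieName) return decodeURIComponent(part.slice(eq + 1).trim());
  }
  return undefined;
}

// Personalisation runs unless the user has explicitly opted out.
function personalisationEnabled(cookieString: string): boolean {
  return readCookie(cookieString, OPT_OUT_COOKIE) !== "1";
}

// In a browser, on page load (runPersonalisationRules is hypothetical):
// if (personalisationEnabled(document.cookie)) runPersonalisationRules();
```

A "cookie preferences" pop-up would then only need to set or clear this one cookie.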

Given that data stays in local storage and some data is sent to GA, does the solution provide any analytics dashboard (for campaigns and such)?

The solution in place doesn’t send any data to Google Analytics. We use Matomo as an analytics package to store page view data on the site. Matomo is GDPR compliant and doesn’t store the full IP address of the user. It therefore has a better privacy profile than Google Analytics, which is not GDPR compliant.

The TGA does indeed run their own Google Analytics for tracking user behaviour and can therefore track page views, campaigns, funnels and the like. So these typical analytics tools are available as usual.

We recommend that clients continue with their current analytics package. The data being reported can be improved by making use of the local storage data we have for each user. For example, a custom GTM tag could take extra dimension data from local storage to improve the page view events inside Google Analytics.
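The GTM enrichment suggested above might look like the sketch below. It pushes a custom event onto the Google Tag Manager data layer, carrying the string-valued dimensions read from the stored profile. The event name `personalised_page_view`, the `profile_` key prefix and the `profile` storage key are hypothetical. A matching GTM trigger and data layer variables would also need to be configured.

```typescript
type DataLayerEntry = Record<string, unknown>;

// Push a page-view event enriched with profile dimensions from local storage.
function pushEnrichedPageView(dataLayer: DataLayerEntry[], storedProfileJson: string | null): DataLayerEntry {
  const entry: DataLayerEntry = { event: "personalised_page_view" }; // hypothetical event name
  if (storedProfileJson !== null) {
    try {
      const parsed: unknown = JSON.parse(storedProfileJson);
      if (typeof parsed === "object" && parsed !== null) {
        for (const [key, value] of Object.entries(parsed)) {
          // Only forward simple string dimensions such as audience or topic.
          if (typeof value === "string") entry[`profile_${key}`] = value;
        }
      }
    } catch {
      // A corrupt profile should not block the page view itself.
    }
  }
  dataLayer.push(entry);
  return entry;
}

// In a browser this might run as (storage key is hypothetical):
// const w = window as unknown as { dataLayer?: DataLayerEntry[] };
// pushEnrichedPageView((w.dataLayer ??= []), localStorage.getItem("profile"));
```

Only summarised dimension values leave the device in this sketch. The client ID and raw counts are not forwarded.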

Inside Matomo we keep track of the user characteristics as dimensions for each page view. This means that all page views can be reported on along the lines of audience, topic, product type, service and campaign. We also track the promotions that are clicked so we can see which promotions are proving to be most popular. These reports can be visualised as pie charts as well.
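A sketch of how dimensions and promotion clicks can be recorded with Matomo's JavaScript tracker, which reads commands from the global `_paq` array. It uses the tracker's `setCustomDimension`, `trackPageView` and `trackEvent` commands. The dimension IDs, the event category and action labels, and the function names are hypothetical. Real IDs are assigned when custom dimensions are created in Matomo.

```typescript
// Matomo's JavaScript tracker reads commands from the global `_paq` array.
type MatomoCommand = (string | number)[];

// Hypothetical IDs: real IDs are assigned when custom dimensions are created in Matomo.
const CUSTOM_DIMENSION_IDS: Record<string, number> = {
  audience: 1,
  topic: 2,
  productType: 3,
  service: 4,
  campaign: 5,
};

// Set each known profile dimension, then record the page view.
function queuePageView(paq: MatomoCommand[], profile: Record<string, string | undefined>): void {
  for (const [dimension, id] of Object.entries(CUSTOM_DIMENSION_IDS)) {
    const value = profile[dimension];
    if (value) paq.push(["setCustomDimension", id, value]);
  }
  paq.push(["trackPageView"]);
}

// Record a promotion click as an event; the category and action labels are illustrative.
function queuePromotionClick(paq: MatomoCommand[], promotionId: string): void {
  paq.push(["trackEvent", "Personalisation", "Promotion click", promotionId]);
}
```

In a browser, `paq` would be `window._paq`. Setting the dimensions before `trackPageView` attaches them to that page view, which allows reporting by audience, topic, product type, service and campaign.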

Is the theme javascript layer using a framework?

The theme and site structure are fairly traditional in that much of the rendering is done in PHP and returned to the client from the server. However, the personalised components on the page are delivered using a decoupled approach. On the TGA site, and in Convivial for GovCMS, there are “Personified” paragraphs that take data from local storage and build a URL with that data as parameters. This URL is used to hit a Drupal view JSON endpoint, which returns the required data (tasks, promos, etc.). The returned JSON is then transformed with a client-side template to display the HTML to the user.
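The request-and-render flow of a Personified paragraph can be sketched as below. The endpoint path `/api/tasks`, the parameter names and the item shape are hypothetical. The template is a plain string builder that escapes values, standing in for whatever client-side templating is actually used. `fetchJson` is injected so the sketch runs without a browser.

```typescript
// Hypothetical shape of one item returned by the JSON endpoint.
interface PersonifiedItem {
  title: string;
  url: string;
}

// Turn profile values into query parameters, skipping empty ones.
function buildEndpointUrl(path: string, profile: Record<string, string | undefined>): string {
  const params = Object.entries(profile)
    .filter((entry): entry is [string, string] => Boolean(entry[1]))
    .map(([key, value]) => `${encodeURIComponent(key)}=${encodeURIComponent(value)}`);
  return params.length > 0 ? `${path}?${params.join("&")}` : path;
}

function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// A minimal client-side "template": escape the data, then build list markup.
function renderItems(items: PersonifiedItem[]): string {
  const rows = items.map((item) => `<li><a href="${escapeHtml(item.url)}">${escapeHtml(item.title)}</a></li>`);
  return `<ul>${rows.join("")}</ul>`;
}

// Fetch and render; fetchJson is injected so the sketch runs without a browser.
async function loadPersonified(
  path: string,
  profile: Record<string, string | undefined>,
  fetchJson: (url: string) => Promise<unknown>,
): Promise<string> {
  const data = await fetchJson(buildEndpointUrl(path, profile));
  return renderItems(Array.isArray(data) ? (data as PersonifiedItem[]) : []);
}
```

In a browser, `fetchJson` could be `(u) => fetch(u).then((r) => r.json())`, with the resulting HTML inserted into the paragraph's container.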

The user profile which is built by the Convivial Profiler JS library is used by client-side code to build up the customised content which is displayed to the user. This profile could be used by any front-end framework to build the page as required.

Pia Andrews (in a previous presentation) suggested users wanted to dial 'helpfulness' up and down. Do you give users visibility and control over their personalisation profile?

As described above, it is possible for users to opt in or out of personalisation depending on the preferences of the user, so long as that option is available to them. For example, accepting cookies through a pop-up. This outcome could be achieved in several ways. Currently, there is no such option on the TGA site. This is something that may be added in the future.

You said you use various ways to track user behaviour for personalisation. Can you tell us about your privacy considerations when you did this?

We take a privacy-first approach:

- No personally identifiable data is collected.

- User profile data is not shared outside of the user’s device.

- Client ID is not collected in analytics.

- Only a partial IP address is collected for analytics.

- Client ID and Item ID may be collected for tracking behaviour in the recommendation engine (not in play on the TGA site).

Is this an additional feature or element in the UI that works alongside more standard navigational features?

Yes. There are many ways that this may be implemented. We have settled on two main patterns for the TGA website.

- Show “task” cards to users based on their audience, product type and service.

- Show “CTA” promotions to users based on their next best step (intention).

Other approaches could be taken, but have not been:

- Customised messaging

- Filtered results

Is TGA on SaaS or PaaS?

SaaS. All of the features discussed have been implemented with client-side code and standard modules available on the SaaS platform.

Do you have an interactive or video example of this personalisation in action?

A POC demonstration was provided to the GovCMS community in 2022 which walked through all of this functionality. GovCMS may be able to share this video with you if you are a government member of the community. I would be happy to explain further to you if you would like to get in touch.

How do you dynamically update the homepage - most searched items?

The homepage shows the following items:

- Audience task cards: The 6 most relevant cards for the audience a user has.

- CTA promotion: The next best step for a user depends on their current intention.